By: David Danks, Carnegie Mellon University

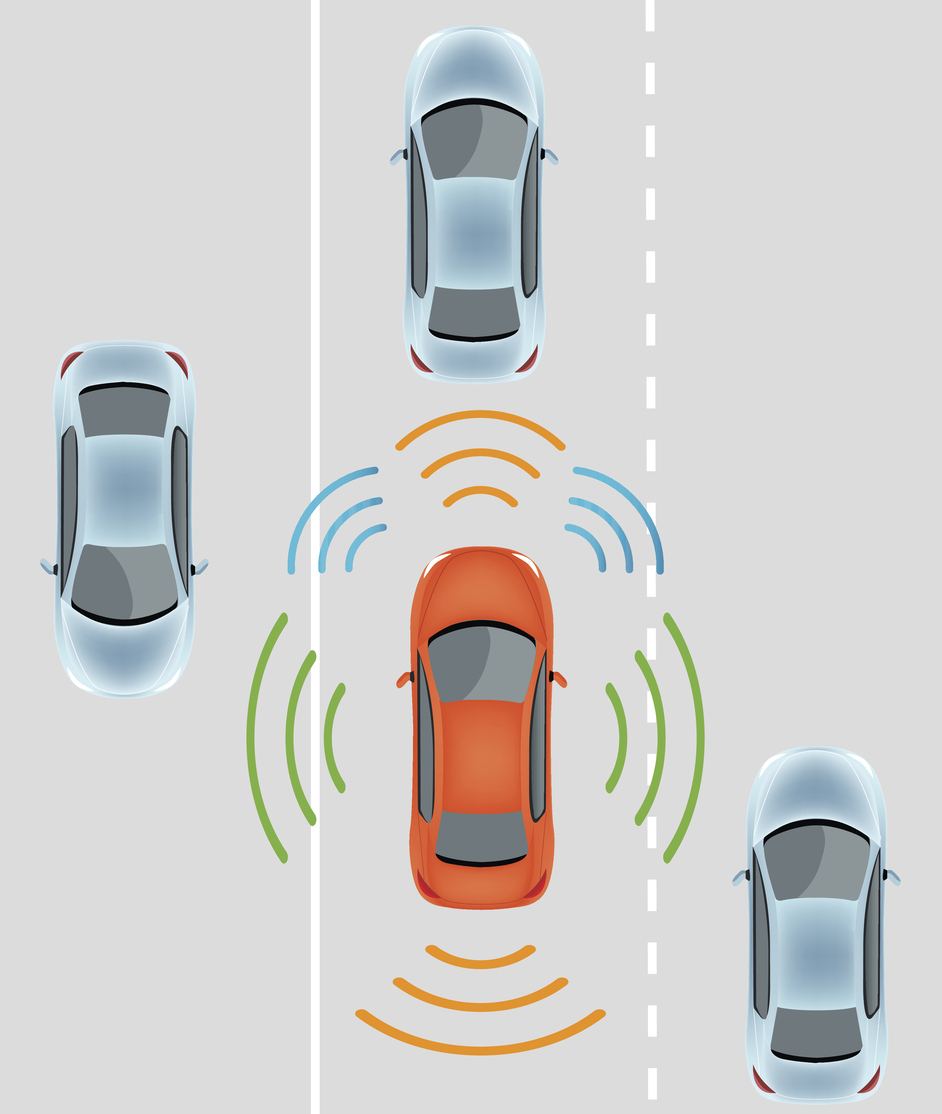

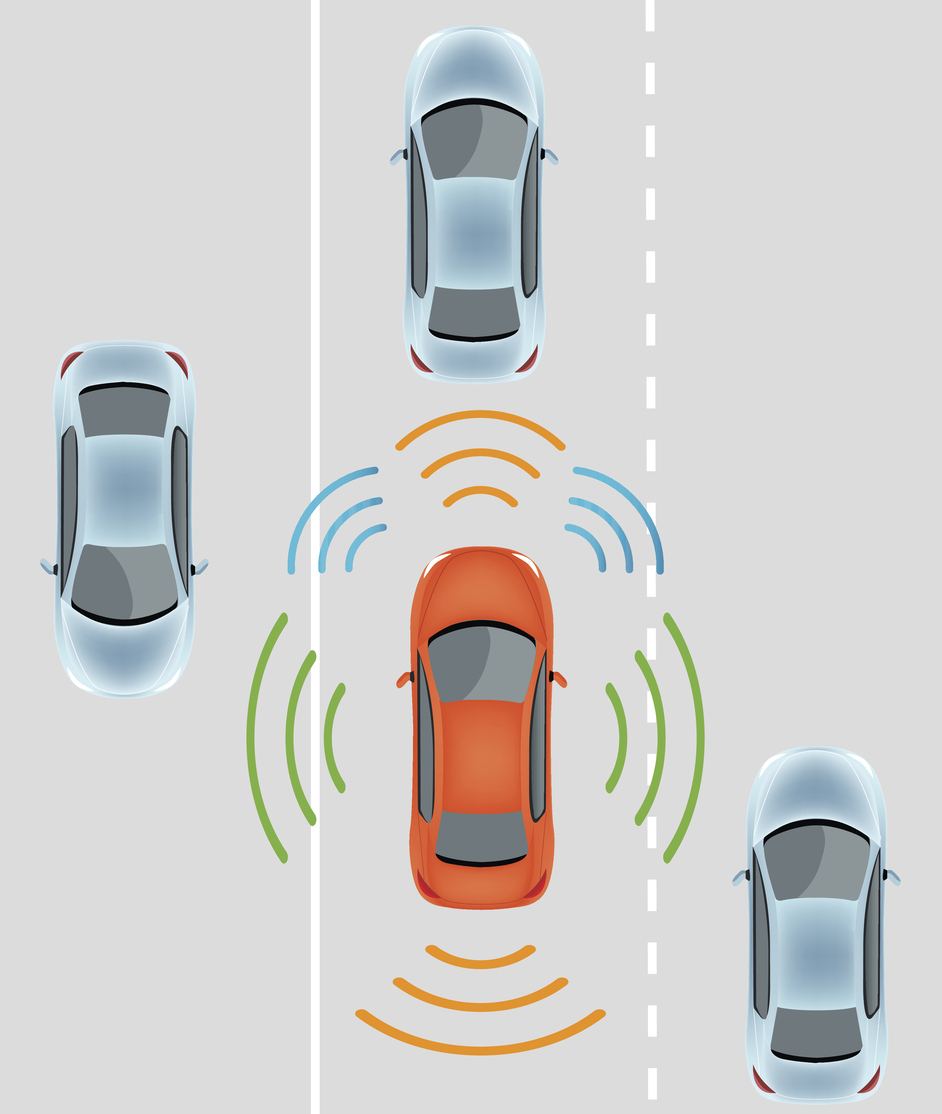

In 2016, self-driving cars went mainstream. Uber’s autonomous vehicles became ubiquitous in neighborhoods where I live in Pittsburgh, and briefly in San Francisco. The U.S. Department of Transportation issued new regulatory guidance for them. Countless papers and columns discussed how self-driving cars should solve ethical quandaries when things go wrong. And, unfortunately, 2016 also saw the first fatality involving an autonomous vehicle.

Autonomous technologies are rapidly spreading beyond the transportation sector, into health care, advanced cyberdefense and even autonomous weapons. In 2017, we’ll have to decide whether we can trust these technologies. That’s going to be much harder than we might expect.

Trust is complex and varied, but also a key part of our lives. We often trust technology based on predictability: I trust something if I know what it will do in a particular situation, even if I don’t know why. For example, I trust my computer because I know how it will function, including when it will break down. I stop trusting it if it starts to behave differently or surprisingly.

In contrast, my trust in my wife is based on understanding her beliefs, values and personality. More generally, interpersonal trust does not involve knowing exactly what the other person will do – my wife certainly surprises me sometimes! – but rather why they act as they do. And of course, we can trust someone (or something) in both ways, if we know both what they will do and why.

I have been exploring possible bases for our trust in self-driving cars and other autonomous technology from both ethical and psychological perspectives. These are devices, so predictability might seem like the key. Because of their autonomy, however, we need to consider the importance and value – and the challenge – of learning to trust them in the way we trust other human beings.

Autonomy and predictability

We want our technologies, including self-driving cars, to behave in ways we can predict and expect. Of course, these systems can be quite sensitive to context, including other vehicles, pedestrians, weather conditions and so forth. In general, though, we might expect a self-driving car that is repeatedly placed in the same environment to behave similarly each time. But in what sense would these highly predictable cars be autonomous, rather than merely automatic?
